In recent times, using containers for development has become incredibly popular. Containers make it easy to break complex monolithic applications into smaller, modular microservices. They are easy to scale and make for consistent environments irrespective of the operating system they are being hosted on.

If you work with containers at scale, you will almost certainly come across Kubernetes. Although there are alternatives on the market, such as Docker Swarm, Nomad, and Rancher, Kubernetes is the most popular tool developers use for container orchestration. It also works well with Docker, another common tool developers use to build and deliver containers.

What is Kubernetes?

“Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation”, says Kubernetes.io. Kubernetes has a large, rapidly growing ecosystem, and services, support, and tools for it are widely available.

The name ‘Kubernetes’ comes from Greek, where it means helmsman or pilot. Kubernetes was born at Google, which open-sourced the project in 2014. It builds on more than 15 years of Google’s experience running production workloads at scale, combined with ideas and practices from the community.

What does Kubernetes do?

In a production environment, containers need to be managed effectively to ensure there is no downtime. If a container stops working, another one needs to start, and the other containers should not be affected. This is much easier when a system handles it for you, and that is what Kubernetes does. Kubernetes provides a framework to run distributed systems resiliently. It handles scaling and failover for your applications, and it provides deployment patterns.
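The "if a container stops working, another one needs to start" behavior can be pictured as a loop that compares a desired state with the actual state. The following is a minimal sketch of that idea in plain Python, not Kubernetes code: `ToyCluster`, `Container`, and `reconcile` are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Container:
    name: str
    healthy: bool = True


@dataclass
class ToyCluster:
    """Hypothetical in-memory stand-in for a cluster; not a Kubernetes API."""
    desired_replicas: int
    containers: list = field(default_factory=list)
    _ids: count = field(default_factory=count, repr=False)

    def reconcile(self) -> None:
        # Drop failed containers; healthy ones are left untouched.
        self.containers = [c for c in self.containers if c.healthy]
        # Start replacements until the desired count is reached.
        while len(self.containers) < self.desired_replicas:
            self.containers.append(Container(name=f"web-{next(self._ids)}"))
        # Remove surplus containers if the desired count was lowered.
        del self.containers[self.desired_replicas:]


cluster = ToyCluster(desired_replicas=3)
cluster.reconcile()                    # starts web-0, web-1, web-2
cluster.containers[1].healthy = False  # simulate a crash
cluster.reconcile()                    # replaces only the failed container
names = [c.name for c in cluster.containers]
print(names)  # ['web-0', 'web-2', 'web-3']
```

Note that the healthy containers keep running across both calls; only the failed one is replaced.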

Let’s go over the different things Kubernetes can do:

- Service discovery and load balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load-balance and distribute the network traffic so that the deployment remains stable.

- Storage orchestration: Kubernetes lets you automatically mount a storage system of your choice, such as local storage or storage from a public cloud provider.

- Automated rollouts and rollbacks: You describe the desired state of your deployed containers, and Kubernetes changes the actual state to the desired state. For example, you can automate Kubernetes to create new containers for a deployment, remove the existing containers, and move their resources to the new containers.

- Automated bin packing: You give Kubernetes a cluster of nodes on which to run containerized tasks and tell it how much CPU and memory (RAM) each container needs. Kubernetes then fits the containers onto the nodes to make the best use of the available resources.

- Self-healing properties: Kubernetes restarts containers that fail, replaces containers when needed, and kills containers that have become non-responsive. This keeps the system running and gives users a smooth experience.

- Secret and configuration management: Kubernetes stores and manages sensitive information such as passwords and SSH keys. You can update secrets and application configuration without rebuilding your container images and without exposing secrets in your stack configuration.
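To make the bin packing item above more concrete, here is a small sketch of the classic first-fit decreasing heuristic in Python. This is an illustration of the general idea only: the real Kubernetes scheduler does not work this way internally, and the function name, the container names, and the CPU figures (in millicores) are all assumptions made for the example.

```python
def first_fit_decreasing(requests, node_capacity):
    """Place each container's CPU request on the first node with room.

    `requests` maps a container name to its CPU request; `node_capacity` is
    the CPU capacity of every (identical) node. Returns a list of nodes,
    each a list of container names.
    """
    nodes = []  # container names placed on each node
    free = []   # remaining capacity of each node
    # Largest requests first, so big containers are not stranded at the end.
    for name, cpu in sorted(requests.items(), key=lambda kv: kv[1], reverse=True):
        if cpu > node_capacity:
            raise ValueError(f"{name} requests more CPU than a node provides")
        for i, room in enumerate(free):
            if cpu <= room:
                nodes[i].append(name)
                free[i] -= cpu
                break
        else:
            # No existing node has room, so use another node.
            nodes.append([name])
            free.append(node_capacity - cpu)
    return nodes


requests = {"api": 500, "db": 700, "cache": 300, "worker": 400, "cron": 100}
placement = first_fit_decreasing(requests, node_capacity=1000)
print(placement)  # [['db', 'cache'], ['api', 'worker', 'cron']]
```

Five containers that request 2000 millicores in total fit on two 1000-millicore nodes, which is the kind of packing the resource requests make possible.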

What are the different components of Kubernetes?

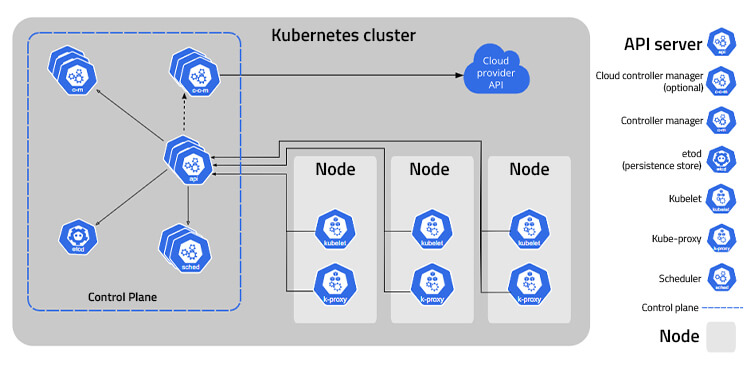

When Kubernetes is deployed, it forms a cluster. A Kubernetes cluster consists of a set of worker machines, called nodes, that run the containerized applications. The nodes host the pods that make up the application workload. A control plane manages the worker nodes and the pods in the cluster. In a production environment, the control plane usually runs across multiple computers and the cluster usually runs multiple nodes, which provides fault tolerance and high availability.
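The relationship between a cluster, its nodes, and the pods they host can be sketched as a small data model. The Python below is a toy illustration under simplifying assumptions: `Cluster`, `Node`, `schedule`, and `fail_node` are hypothetical names, the placement rule (fewest pods wins) is invented for the example, and in a real cluster lost pods are replaced by new ones rather than moved.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    pods: list = field(default_factory=list)  # names of pods hosted here


@dataclass
class Cluster:
    nodes: dict = field(default_factory=dict)  # node name -> Node

    def add_node(self, name):
        self.nodes[name] = Node(name)

    def schedule(self, pod):
        # Toy control-plane decision: pick the node hosting the fewest pods.
        target = min(self.nodes.values(), key=lambda n: len(n.pods))
        target.pods.append(pod)
        return target.name

    def fail_node(self, name):
        # When a node is lost, its pods are placed on the remaining nodes.
        lost = self.nodes.pop(name)
        for pod in lost.pods:
            self.schedule(pod)


cluster = Cluster()
for node_name in ("node-a", "node-b", "node-c"):
    cluster.add_node(node_name)
for pod in ("web-1", "web-2", "web-3", "web-4"):
    cluster.schedule(pod)

cluster.fail_node("node-a")
layout = {n.name: n.pods for n in cluster.nodes.values()}
print(layout)  # {'node-b': ['web-2', 'web-1'], 'node-c': ['web-3', 'web-4']}
```

Because the workload is spread over several nodes, losing one node leaves all four pods running on the others, which is the fault tolerance the paragraph above describes.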

How to begin using Kubernetes?

Installation

The first step is to install Kubernetes. Before you do, you need to choose the type of installation you want. This choice depends on factors such as:

- Ease of maintenance

- Security

- Control

- Available resources

- Expertise at hand for operation

Deployment

Kubernetes can be deployed on a local machine, in the cloud, in an on-premises data center, or as a managed Kubernetes cluster. Choose where to deploy Kubernetes based on your requirements.

Kubernetes Environments

- Learning Environment: As the name suggests, this is the environment to use when you are learning Kubernetes. Here you can use tools supported by the Kubernetes community, or other tools in the ecosystem, to set up a Kubernetes cluster on a local machine. Two common options are Minikube and Kind. Minikube runs a single-node Kubernetes cluster inside a virtual machine on your laptop, which makes it an excellent tool for people who are trying out Kubernetes or developing with it on a day-to-day basis. Kind, on the other hand, runs local Kubernetes clusters using Docker containers as nodes.

- Production Environment: As the name suggests, this is the environment you use for production, once you have completed Kubernetes training in the learning phase and are well-versed in Kubernetes. It involves more complex topics such as container runtimes, turnkey cloud solutions, and even running Windows in Kubernetes. In a production environment, you can install Kubernetes with deployment tools like kops or Kubespray.

In this way, one can get started with learning and using Kubernetes. However, to be fully ready to use Kubernetes for different container orchestration requirements, one needs thorough Kubernetes training and hands-on practice. Working well with Kubernetes requires an understanding of a wide range of concepts, including containers, cluster architecture, workloads, services, load balancing, networking, storage, configuration, and container security. To learn all of this properly, it is recommended to enroll in a thorough professional Kubernetes training course.

Cognixia, the world’s leading digital talent transformation company, offers an intensive Docker and Kubernetes Bootcamp that helps Kubernetes enthusiasts go from novice to master. This Kubernetes training covers basic to advanced concepts of Docker and Kubernetes. The certification course covers all the important concepts, such as:

- Fundamentals of Docker

- Fundamentals of Kubernetes

- Running Kubernetes instances on Minikube

- Creating and working with Kubernetes clusters

- Working with resources

- Creating and modifying workloads

- Working with Kubernetes API and key metadata

- Working with specialized workloads

- Scaling deployments and application security

- Understanding the container ecosystem

To be eligible for this Docker and Kubernetes training and certification course, one needs a basic knowledge of Linux commands and a fundamental understanding of DevOps and YAML. The training includes multiple hands-on exercises and projects as part of the curriculum to ensure every participant gets a thorough understanding of every concept and skill. At the end of the training, one also gets a globally recognized Docker and Kubernetes certification that would add immense value to one’s resume. To know more about this Docker and Kubernetes training, visit www.cognixia.com.