There has been a lot of debate and confusion around the question of Hadoop versus Spark. Many companies are unsure how to choose between the two frameworks. To some extent, Spark has pulled ahead as an active open source Big Data project. Still, the two frameworks cannot be compared directly, even though they have a lot in common in how they are used.

Given how quickly the field is changing, it is worth revisiting the “Spark versus Hadoop” question every so often. I am writing this blog to share some of the most significant similarities and differences between the Spark and Hadoop frameworks.

Hadoop and Spark, as we all know, are both Big Data frameworks. Both include some of the most prominent tools used for common Big Data tasks.

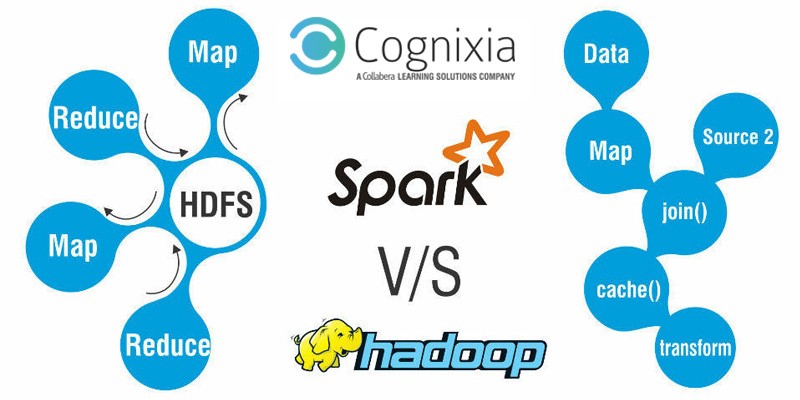

Hadoop led the race as the foremost open source Big Data framework until Apache Spark, a more advanced framework, came along. It didn’t take long for Spark to become the more popular of these two Apache Software Foundation projects. That said, the two frameworks do not perform the same tasks, and they are not mutually exclusive, so they can work together. Although Spark can run some workloads up to 100 times faster than Hadoop, it does not provide its own distributed storage system.

Distributed storage is a key part of many of the Big Data projects we come across today. It cannot be sidelined, for a simple reason: it allows very large volumes of data (multiple petabytes) to be stored across many ordinary hard drives. This removes the need for expensive, custom-built machines capable of holding all the data in one place. These systems are also highly scalable, which simply means that you can add drives as needed as your datasets grow.
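To make the idea concrete, here is a toy sketch in plain Python (not HDFS code) of how a distributed file system might split a file into fixed-size blocks and spread copies of each block across several drives. The function `place_blocks`, the drive names and the simple round-robin placement are hypothetical assumptions; HDFS itself uses 128 MB blocks and three replicas by default, but places replicas with a rack-aware policy rather than this rotation.

```python
from collections import Counter
from typing import Dict, List


def place_blocks(file_size_mb: int, block_size_mb: int, drives: List[str],
                 replication: int = 3) -> Dict[int, List[str]]:
    """Split a file into fixed-size blocks and assign each block to
    `replication` distinct drives (hypothetical round-robin policy)."""
    if replication > len(drives):
        raise ValueError("need at least as many drives as replicas")
    num_blocks = -(-file_size_mb // block_size_mb)  # ceiling division
    return {
        # Rotate the starting drive so copies spread evenly across drives.
        block: [drives[(block + r) % len(drives)] for r in range(replication)]
        for block in range(num_blocks)
    }


# A 1,000 MB file in 128 MB blocks needs 8 blocks; with 4 drives and 3 replicas,
# every drive ends up holding 6 block copies.
layout = place_blocks(1000, 128, ["d0", "d1", "d2", "d3"])
print(len(layout), Counter(d for copies in layout.values() for d in copies))
```

Adding a fifth name to the `drives` list spreads the same block copies over more hardware, which is the scalability property described above.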

As discussed earlier, Spark has no system of its own for organizing files in a distributed manner, so it relies on third-party storage for this. As a result, Spark is most often installed on top of Hadoop, which lets Spark’s superior analytics applications work on data stored in HDFS (Hadoop Distributed File System).

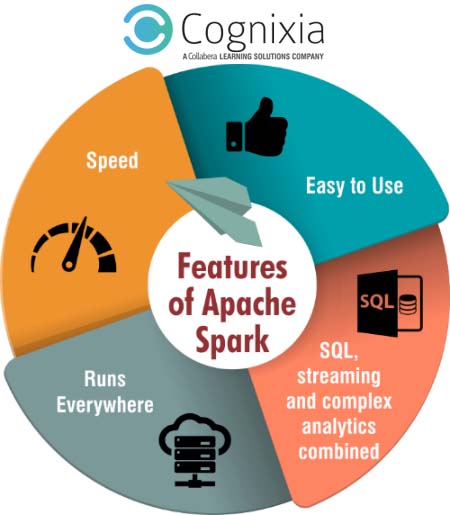

It is the speed at which Spark processes data that gives it a massive lead. Spark handles most of its operations “in memory”: it copies data from distributed physical storage into much faster RAM and works on it there. This reduces the time spent writing to and reading from slow mechanical hard disks, which is what usually happens with Hadoop’s MapReduce system.
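The difference in I/O pattern can be illustrated with a toy word count in plain Python. This is not Hadoop or Spark code: the hypothetical `word_count_disk` mimics the MapReduce habit of persisting each stage's output to disk and reading it back, while the hypothetical `word_count_memory` keeps intermediate results in RAM, as Spark tries to do. The JSON file names are also made up for illustration.

```python
import json
import os
import tempfile
from collections import Counter

LINES = ["spark hadoop spark", "hadoop hdfs", "spark rdd"]  # toy input


def word_count_disk(lines, workdir):
    """MapReduce-style: each stage persists its output to disk and the next
    stage reads it back. Returns (counts, number_of_disk_writes)."""
    writes = 0
    # Map stage: emit (word, 1) pairs, then write them to disk.
    pairs = [(word, 1) for line in lines for word in line.split()]
    map_path = os.path.join(workdir, "map_output.json")
    with open(map_path, "w") as f:
        json.dump(pairs, f)
    writes += 1

    # Reduce stage: read the pairs back from disk, sum them, write the result.
    with open(map_path) as f:
        counts = Counter()
        for word, n in json.load(f):
            counts[word] += n
    reduce_path = os.path.join(workdir, "reduce_output.json")
    with open(reduce_path, "w") as f:
        json.dump(counts, f)
    writes += 1

    with open(reduce_path) as f:
        return json.load(f), writes


def word_count_memory(lines):
    """In-memory style: intermediate results never leave RAM."""
    counts = Counter(word for line in lines for word in line.split())
    return dict(counts), 0


with tempfile.TemporaryDirectory() as workdir:
    disk_counts, disk_writes = word_count_disk(LINES, workdir)
mem_counts, mem_writes = word_count_memory(LINES)
print(disk_counts == mem_counts, disk_writes, mem_writes)  # True 2 0
```

Both versions give the same answer; the disk-based one simply pays for two extra write-and-read round trips, and on real clusters with mechanical disks that cost grows with every stage in the pipeline.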

Once an operation is complete, MapReduce writes all the data back to a physical storage medium. This is done so that a backup is in place if something goes wrong. Another reason for this step is that data held in RAM is more vulnerable than data stored on magnetic disks, because RAM loses its contents when a machine fails. Spark addresses this with RDDs (Resilient Distributed Datasets), which help it recover data in case of failure.
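RDDs recover lost data by recording their lineage, the chain of transformations used to build them, so a lost in-memory partition can be recomputed from the source data rather than restored from a backup copy. The toy class below sketches that idea in plain Python under stated assumptions: `ToyRDD`, `get_partition` and `lose_partition` are hypothetical, and only the `map`/`filter` names loosely mirror Spark's real RDD API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Step = Callable[[List[int]], List[int]]


@dataclass
class ToyRDD:
    """Hypothetical stand-in for an RDD: source partitions (assumed to sit in
    reliable storage such as HDFS) plus the lineage of transformations."""
    source: List[List[int]]
    lineage: List[Step] = field(default_factory=list)
    cache: Dict[int, List[int]] = field(default_factory=dict)  # plays the role of RAM
    computations: int = 0

    def map(self, fn: Callable[[int], int]) -> "ToyRDD":
        # Record the step lazily instead of running it.
        return ToyRDD(self.source, self.lineage + [lambda part: [fn(x) for x in part]])

    def filter(self, pred: Callable[[int], bool]) -> "ToyRDD":
        return ToyRDD(self.source, self.lineage + [lambda part: [x for x in part if pred(x)]])

    def get_partition(self, i: int) -> List[int]:
        # Serve from memory if present; otherwise replay the lineage on the source.
        if i not in self.cache:
            part = self.source[i]
            for step in self.lineage:
                part = step(part)
            self.cache[i] = part
            self.computations += 1
        return self.cache[i]

    def lose_partition(self, i: int) -> None:
        # Simulate a node failure wiping the in-memory copy.
        self.cache.pop(i, None)


rdd = ToyRDD(source=[[1, 2, 3], [4, 5, 6]]).filter(lambda x: x % 2 == 0).map(lambda x: x * x)
before = rdd.get_partition(1)
rdd.lose_partition(1)
after = rdd.get_partition(1)  # rebuilt from lineage, not from a backup
print(before, after, rdd.computations)  # [16, 36] [16, 36] 2
```

The design choice this illustrates is that no second copy of the intermediate data is needed: as long as the source survives and the transformations are deterministic, replaying the lineage reproduces the lost partition.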

Spark can also handle advanced data processing much more efficiently than Hadoop, and combined with its fast in-memory operations, this makes Spark even more popular. One example is real-time processing, which refers to feeding data into an analytical application soon after it is captured and sharing the results with users through a console so that they can act immediately. This type of processing can be seen in all kinds of Big Data applications today. Some of the most common examples are recommendation engines used by retailers and performance monitoring of industrial machinery in manufacturing.
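The machinery-monitoring example can be sketched as a small event loop in plain Python: each sensor reading is processed the moment it arrives, and an alert is produced immediately rather than after a batch job runs over the accumulated data. The function `monitor`, the three-reading window and the 80-degree threshold are all hypothetical; in practice this logic would typically run on a stream-processing engine such as Spark's streaming APIs rather than a single Python loop.

```python
from collections import deque
from typing import Deque, Dict, Iterable, Iterator, Tuple


def monitor(readings: Iterable[Tuple[str, float]], window: int = 3,
            threshold: float = 80.0) -> Iterator[str]:
    """Yield an alert as soon as a machine's rolling average temperature over
    its last `window` readings exceeds `threshold`."""
    recent: Dict[str, Deque[float]] = {}
    for machine, temp in readings:  # one reading at a time, as captured
        buf = recent.setdefault(machine, deque(maxlen=window))
        buf.append(temp)  # the deque drops the oldest reading automatically
        if len(buf) == window:
            avg = sum(buf) / window
            if avg > threshold:
                yield f"ALERT {machine}: rolling avg {avg:.1f}"


stream = [("m1", 70.0), ("m2", 75.0), ("m1", 85.0),
          ("m1", 90.0), ("m2", 76.0), ("m1", 95.0)]
for alert in monitor(stream):
    print(alert)
```

Because `monitor` is a generator, each alert reaches the caller as soon as the triggering reading is consumed, which is the "immediate action" property described above.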

The only reason Hadoop and Spark are touted as competitors is that they coexist. That, however, is not really the case. There is certainly some overlap between the two, but since both frameworks are non-commercial (open source) products, there is actually no race at all. The companies that do make money from them sell support and installation services, and they usually offer both frameworks, leaving customers to decide which functions they need from each.

From this, we can infer that, at the end of the day, it is the customer’s needs that decide which framework to choose. Companies have long used Hadoop to tackle their Big Data problems, and now Spark has gained popularity as well. This is why there is a constant demand for professionals skilled in Hadoop and Spark across the industry. Plus, you do not need a college degree to become skilled in either of these frameworks.

Cognixia provides great training programs on Hadoop and Spark technologies, preparing participants to work on clusters with the help of carefully structured course content and subject matter experts who deliver the training in a comprehensive manner.