A conversation about Big Data cannot be completed without mentioning Hadoop and Apache Spark. These are two very critical frameworks around which most of the Big Data Analytics revolve. By being two effective tools in Big Data, there’s bound to be the debate about which one is better. Both these frameworks have their own set of advantages and function in a different manner from each other. This post throws light on Hadoop and Spark as two different frameworks citing the dissimilarities in their ways of working on big data.

What is Apache Spark?

Apache Spark is a framework which has been created to perform general data analytics on a distributed computing cluster similar to Hadoop. This framework provides in-memory calculations/ computations for the speed increase and data process over MapR. Spark runs on top of an existing Hadoop cluster and accesses the Hadoop Data Store (HDFS). The framework is also capable of processing structured data in Hive and streaming data from various platforms like HDFS, Flume, Kafka and Twitter.

Apache Spark to replace Hadoop? – A significant Question

Hadoop is a corresponding data processing framework which has conventionally been used in running MapR jobs. The complete running time of these jobs might take from minutes to hours. Apache has created Spark in order to run on top of Hadoop; thus, providing an alternative to the usual batch MapR model. This is used for real-time stream data processing and increasing the speeds of interactive queries, hence resolving them within seconds. This evidently shows that Hadoop supports both frameworks – the conventional MapR and contemporary Spark.

With this information at hand, we can arrive to a conclusion that Hadoop acts as a general purpose framework supporting various models whereas Spark is more of an alternative of Hadoop MapR rather being a replacement of Hadoop framework.

Choosing between Hadoop MapR and Apache Spark

Spark requires a higher RAM in place of a network and disk I/O. In terms of speed, Spark is faster than Hadoop. However, using large RAM makes it necessary to have a dedicated and expensive server in order to get effective results. All this is dependent on various factors and these factors which influence the decisions keep changing dynamically with time.

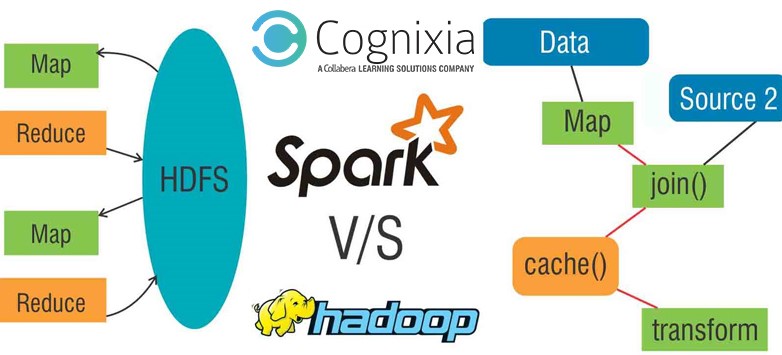

Hadoop MapR v/s Apache Spark

There are certain differences between Hadoop MapR and Apache Spark which one needs to understand. First and foremost is the difference in the ways these two platforms store data – Spark does it in-memory while Hadoop stores the data on disk. Replication of data to achieve fault tolerance is what Hadoop puts to use. On the other hand, Spark puts different data storage model to use – RDD or resilient distributed datasets are a smart way of assuring fault tolerance which leads to the minimized network I/O.

A tried and tested method to achieve fault tolerance is used by RDDs. For example – if a partition of an RDD is compromised, then that RDD would have ample information to build that particular partition again. This eliminates the need to replicate data for achieving fault tolerance.

Is learning Hadoop necessary before learning Spark?

No, it is not necessary to learn Hadoop to learn or understand Spark. Spark was designed as an independent project. Spark became even more popular after YARN and Hadoop 2.0 because of its ability to run on top of HDFS and various other Hadoop components. Spark has acquired a place as a significant data processing framework in the Hadoop ecosystem which has proven beneficial for businesses and community as it lends the Hadoop stack an increased capability.

But from a developer’s perspective; there’s very little or no commonality between Hadoop and Spark. Wherein the Hadoop ecosystem, you use Java to write MapR jobs, Spark is actually a library which allows parallel computation through function calls.

For operators to keep the cluster running, an overlap in general skills can be seen, like monitoring, configuration and deployment of code.

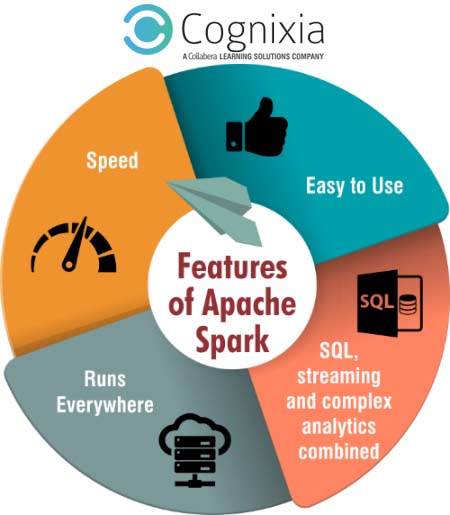

Features of Apache Spark

There are certain features in Apache Spark which have made it popular in the world of Big Data:

- Speed – Apache Spark is comparatively much faster than the Hadoop framework. When compared with Hadoop, Spark has the capability to function hundred times faster in-memory and ten times faster on disk. The major time-consuming factors in data processing are read and write to disk which is reduced considerably by using Spark.

- Easy to Use – Using programming languages like Java, Scala or Python enable you to write applications quickly. This gives a chance for developers to create and run applications in languages they are well-versed with. Spark comes with a set of eighty high-level operators which also enables these developers to make parallel applications

- SQL, streaming and complex analytics combined – Spark not only supports the simple MapR operations but also SQL queries, streaming data and complex analytics like machine learning and graph algorithms. Besides this, Spark also allows its users to blend these capabilities in a single workflow.

- Runs Everywhere – Spark has the capability of running on Hadoop, standalone, or even in the cloud. It also has the access to various data sources like HDFS, Cassandra, HBase and S3 etc.

Get Started on Apache Spark

Spark is very easy to learn and one can quickly understand its nuances to write Big Data applications. Your existing Hadoop and programming skills will come in handy while understanding Spark. Getting skilled on Apache Spark would enable you to address your data queries at a much faster rate.

At Cognixia, we offer various Big Data training. From Hadoop Administration to Hadoop Developer to Apache Spark & Storm and Big Data Training, Cognixia provides a host of programs on these technologies which can usher you into endless career opportunities. For further information, you can write to us